Before reading this article, we suggest you read our previous article on “Difference between Channels and Kernels in Deep Learning”.

Convolutional neural networks are different from other neural networks. This is because it tries to mimic human vision, which is not possible by any other neural network.

If we try to remember the layers of convolution neural networks, it includes a few convolution layers, followed by the pooling layers like max-pooling or average pooling and later fully connected layers and linear layers and so on.

In this case, we often notice that pooling layers always come after a few convolution layers.

Several questions arise - Should pooling be applied after every layer? How can we identify after which layer should it be applied?

So, in this article we will talk about pooling layers and why it is important for us to use these.

First, let us understand why we need pooling layers in CNN.

In the case of feature extraction, our network first extracts the edges and gradients. We cannot figure out how many convolution layers to add, so that important features get extracted which is important to recognize the object (if you don’t extract the edges and gradients then it’s not learning anything as object formation starts from edges and gradients).

It depends on input size and other components like the receptive field as well.

In the above image, we can see the zoomed forehead of the cat, where the blue matrix is showing the edges and gradients. After that, when we consider this on a bigger level we can see two black pixel lines, which is indicating the pattern of that cat.

So, the concept is simple: The main aim of building primary convolution layers is to extract edges and gradients to form the object. Once it satisfies the required receptive field, we often apply a pooling layer.

To understand the reason completely, we need to have a look at how humans process vision.

Consider a case, where we are standing on a road and watching the traffic (We have this insane hobby of watching traffic and getting entertained), we will notice a lot of things like vehicles, humans, trees, and much more.

However, what if we come back home and ask ourselves, which insect was making its shelter on the tree?

Will we be able to answer this? No, right? Even if we saw the insect, we didn’t bother to process it.

The same thing happens in neural networks, we apply convolution layers to extract the features. However, not all the features have a huge impact, so we try to keep only those features that dominate or have more weightage with respect to the other features. (We will come back to this)

Another reason is computation. When we process the images through convolution, we have feature maps, and often try to apply padding in the starting convolution layers, which does not decrease the size of the feature map.

So, that feature map can be computationally expensive for the whole network. Hence, after some layers, we try to help our network with the computation by applying the pooling layers. A pro tip is we can have the dilated convolution of convolution with strides instead of pooling layers but we should use it according to use case.

Now, let’s look at the bigger picture.

Let’s say our first five convolutions are currently busy with their kernels to extract features, they return us all the edges and gradients since they are the primary layers. Now we apply the pooling layers to get the overall important features.

These features show us the overall summary of edges and gradients. Now, a few more convolutions will be working on getting out the textures and patterns from these features that are just given by the pooling. This is how we can apply the logic of features extraction and then pooling.

Pooling Layers

Max-pooling: Max-pooling is one of the most popular pooling layers. 99% of networks use this layer as a pooling layer.

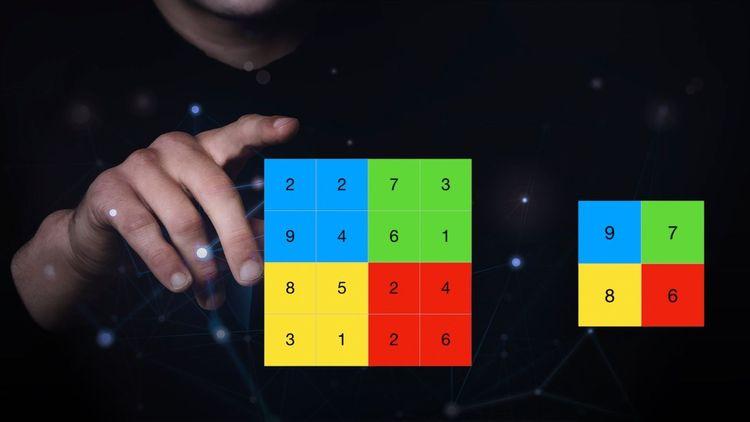

It tries to take out the maximum value among a set of values. As we can also see in the diagram.

Why is it so popular, though?

Well, our aim of applying the pooling layer is to take out the most important feature. This is where it comes and satisfies the requirement and extracts the sharpest features out of it.

Now, no one can tell us why it works so perfectly. However, after many experiments, it has been found that max-pooling works very well compared to other pooling layers. This is because it extracts the sharpest feature, which is the best representation of lower-level features in the feature map.

Average pooling: While max-pooling takes out the maximum, average pooling takes out the average from the set of values.

It is not as popular as max-pooling, but it still works fine.

In the case of average pooling, the target is to take out the smooth features, a kind of mixture of both low-level and sharp features.

Min-pooling: As the name suggests, min-pooling extracts the minimum number out of the available set of numbers. This means, it takes out the minimum pixel out of the feature map.

As we see the calculation in the diagram, it selects the minimum pixel among all pixels. In other words, it takes out the dimmest pixel or the darkest pixel.

Why only pooling(2,2)?

We have noticed it a lot of times, whenever anyone builds the network and applies it to the pool, they always use (2,2) with it. Well, the first 2 show the size of the filter and the second 2 show the stride.

Why, though? There is no hard and fast rule of choosing (2,2); but for most of the images, (2,2) works fine. The reason is size, not every dataset has exceptionally big images like 1000x1000.

The second is when we apply pooling, we manipulate features, which is a good step; but, is it that great? It is better to use a small size instead of choosing a bigger size, since max pooling will ignore the small features and will only pick the sharpest feature. We can think about the working of other pooling layers as well.

Which pooling layer should we use?

Imagine we have a black background, and all the objects available are either white or a really bright color, just like the MNIST dataset.

In this case, it is better to use max pooling. The reason is simple: the black pixel will hold the minimum number and the white pixel will hold the maximum number. Since we are taking out the maximum number, we are extracting the features of the objects, not the background.

Considering the above figure, we can see max pooling is working really fine in black background, but average pooling is smoothing the features instead of exposing them.

Now consider an option where we have a white background and black objects? Minimum pooling will be the best option. This is because, we want to ignore the white pixels, so we choose min or average pooling to extract features from the black and other dark pixels.

What about average pooling? Well, we use average pooling when we want smoother features instead of sharp or really dim features.

Now let’s consider a case where we cannot reject a big chunk of features directly. Consider the medical datasets, or security datasets, where rejecting even a single feature is a big loss. Since max-pooling will reject a lot of values and will only consider the max value, it is better to use average pooling.

Consider the image below

We can clearly see how different types of pooling are affecting the results.

So, let us dive into one of the most important concepts.

Ask yourself which is a better feature representation of 8, the images at the top or the three at the bottom?

You chose the bottom right feature, right?

Max-pooling is ignoring the empty parts in between the shapes, which shows how important it is to ignore some pixels. It is also condensing the data which is a better feature representation for the next layers.

Imagine we have a dataset of cats and dogs, are we sure every cat will be on the right side of the image, and the dog will be on the left side?

No, right?

Let’s consider another example; an apple can be on a tree or can be with the seller, again the position of the object doesn’t matter. In the end, an apple is an apple.

The images below perfectly describe how max-pooling helps in the small position shift of pixels of an object.

Notice how the background has been minimized by max-pooling and only the important features remain in the first two diagrams. However, in the third diagram, we can see it kept the wide line as wide as possible, which also shows the importance of the feature.

Now let’s consider the above diagrams. We can clearly see the small shifts of features are ignored by the pooling. However, it was not able to handle it in the last diagram since the shift was not small.

This diagram describes how well it is handling the shift of features in the first two diagrams, and giving the same output even when the features are holding a different position.

Assuming we pass this dataset to the network, it will notice a lot of things, including the position of objects; but, does it really matter?

The position does not decide what is the object; the feature decides it. So, we do not want our network to learn those things.

In the end, pooling is just a tool in our toolbox. It is up to us whether we want to apply it or not, but we should make sure of the position and the pooling layer to which it should be applied.

If you are interested in learning more about Convolutional Neural Networks and aim to become a Data Scientist, join AlmaBetter’s Full Stack Data Science program. They offer a 100% placement guarantee with jobs that pay Rs. 5-25 LPA. Enroll today!

Read our recent blog “An Intuition behind Computer Vision”.