Picture this —

You’ve spent weeks chatting with your AI fitness coach about your goals: you run every morning, you prefer yoga on weekends, and you’ve proudly cut down on sugar.

But one day, it cheerfully recommends a “bulk-up plan” with high-calorie shakes and zero cardio.

Wait, what?

That’s not just inconvenient — it’s a context breakdown.

Now imagine if your smart car forgot your favorite route, or your customer support bot made you repeat your issue every single time.

In a world where AI is expected to be smart, helpful, and personalized, forgetting basic preferences breaks trust. This is where Context Engineering becomes essential. It’s the discipline that ensures AI systems don’t just respond—they remember, adapt, and learn. Whether it’s a chatbot, a recommendation engine, or a personal tutor, context is the invisible glue that holds intelligent interactions together.

It’s how AI stops being generic — and starts being genuinely personal.

Summary

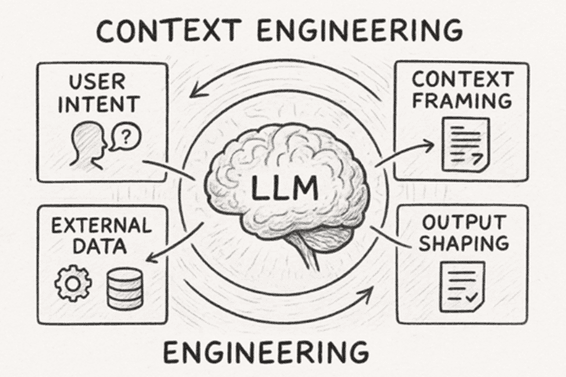

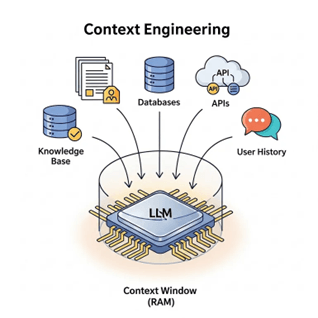

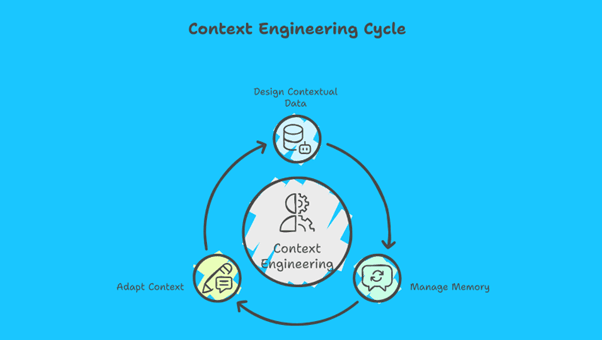

Context Engineering is the strategic design of how AI systems manage, store, and apply contextual information across interactions. Unlike prompt engineering, which focuses on crafting effective one-time inputs, context engineering ensures continuity—so AI systems can remember user preferences, past conversations, and task history.

At its core, context engineering enables AI to behave more like a thoughtful collaborator than a reactive tool. It involves building memory structures, designing context hierarchies, filtering relevant information, and integrating multimodal signals (text, voice, images) to enrich understanding.

This discipline is especially critical in applications like chatbots, recommendation engines, autonomous agents, and Retrieval-Augmented Generation (RAG) systems. By engineering context effectively, developers can reduce prompt fatigue, improve personalization, and unlock smarter, more adaptive AI behavior.

Whether you're building AI products or designing intelligent learning systems, mastering context engineering is a future-proof skill that bridges human-like intelligence with machine precision.

Table of Contents

What is Context Engineering?

Importance of Context Engineering

Types of Context Engineering

Context vs. Prompt Engineering

Core Components

Tools and Technologies for Context Engineering

Conclusion

Additional Readings

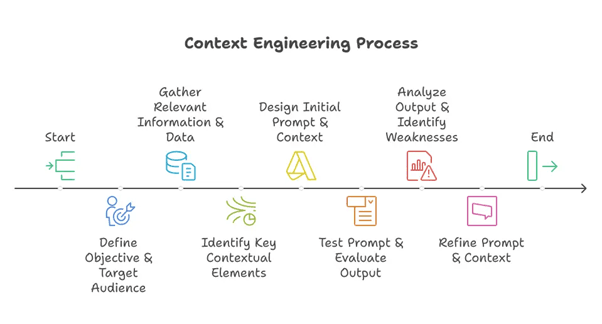

What is Context Engineering?

Context Engineering is the discipline of designing how AI systems understand, store, retrieve, and apply contextual information across interactions. It goes beyond one-shot prompts and focuses on building continuity—so AI can behave more like a thoughtful collaborator than a reactive tool.

In simpler terms, context engineering helps AI remember what happened before, understand what’s happening now, and anticipate what might come next.

Why It Matters

Without context, AI systems are like goldfish—forgetting everything after each turn. This leads to:

Repetitive prompts

Broken user experiences

Poor personalization

Inefficient task execution

With context engineering, AI can:

Recall user preferences and history

Maintain multi-turn conversations

Adapt responses based on prior inputs

Deliver smarter, more human-like interactions

What Counts as “Context”

Context can include:

User preferences (e.g., vegetarian, prefers Python)

Conversation history (e.g., previous questions or corrections)

Task progress (e.g., steps completed in a workflow)

Environmental cues (e.g., time, location, device)

Multimodal inputs (e.g., images, voice, text)

How It Works

Context engineering involves:

Memory design: What to store and how long

Context hierarchy: Session-level, user-level, global-level

Filtering and compression: Keeping only relevant details

Retrieval mechanisms: Injecting context into prompts or models

Governance: Ensuring privacy, relevance, and safety
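To make the five mechanisms above concrete, here is a minimal sketch of how they might fit together in one pipeline. It assumes an in-memory list as the store, shared words as the relevance filter, a per-item `private` flag for governance, and a plain-text prompt template. `ContextItem`, `is_alive`, `relevant`, and `build_prompt` are hypothetical names, not part of any library.

```python
import re
import time
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ContextItem:
    text: str
    level: str                    # context hierarchy: "session", "user", or "global"
    ttl: Optional[float] = None   # memory design: seconds to keep; None = keep indefinitely
    private: bool = False         # governance: never inject private items into prompts
    created: float = field(default_factory=time.time)


def words(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


def is_alive(item: ContextItem, now: float) -> bool:
    # Memory design: drop items whose retention window has expired
    return item.ttl is None or now - item.created < item.ttl


def relevant(item: ContextItem, query: str) -> bool:
    # Filtering: global items always apply; others must share a word with the query
    return item.level == "global" or bool(words(query) & words(item.text))


def build_prompt(memory: List[ContextItem], query: str) -> str:
    now = time.time()
    selected = [m for m in memory
                if is_alive(m, now) and not m.private and relevant(m, query)]
    # Retrieval: inject the surviving context ahead of the user's question
    lines = [f"[{m.level}] {m.text}" for m in selected]
    return "Context:\n" + "\n".join(lines) + f"\n\nUser: {query}"


memory = [
    ContextItem("Answer briefly", level="global"),
    ContextItem("User is vegetarian", level="user"),
    ContextItem("User asked about running shoes", level="session", ttl=1800),
    ContextItem("User card number ends in 4242", level="user", private=True),
]
print(build_prompt(memory, "Suggest a vegetarian dinner"))
```

With this sample memory, the prompt carries the global rule and the vegetarian preference, while the unrelated session note and the private card detail are left out. A real system would likely swap the word-overlap filter for semantic scoring and the list for a database or vector store.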

Code Example: Context-Aware Chatbot

Input:

```python
# Simple in-memory context storage for a chatbot
context = {}

def respond(user_input):
    text = user_input.lower()
    if "name" in text:
        # Treat the last word as the name, e.g. "My name is Alex" -> "Alex"
        context["name"] = user_input.split()[-1]
        return f"Nice to meet you, {context['name']}!"
    elif "how am i" in text and "name" in context:
        # The input was lowercased, so compare against a lowercase phrase
        return f"{context['name']}, you are doing great!"
    else:
        return "Tell me more!"

# Example usage
print(respond("My name is Alex"))
print(respond("How am I today?"))
```

Output:

```text
Nice to meet you, Alex!
Alex, you are doing great!
```

Importance of Context Engineering

In today’s world of AI-powered tools, context is not a luxury — it’s a necessity. Context engineering ensures that intelligent systems don’t just process inputs but truly understand who they’re talking to, what has already happened, and why something matters.

Below are the key reasons why context engineering plays a crucial role in building smarter, more reliable, and human-like AI systems:

1. Enables Personalization

Context allows AI systems to adapt responses to individual users. Instead of generic answers, AI tailors recommendations, tone, and actions based on past interactions.

Example: Spotify analyzes your listening patterns, favorite genres, and frequently played songs to curate personalized playlists, daily mixes, and new song recommendations just for you. It even adapts suggestions over time as your preferences change.

Result: Users feel understood and valued, leading to longer engagement sessions, higher satisfaction with the platform, and stronger loyalty toward the service.

2. Builds Continuity Across Interactions

Without context, every conversation starts from zero. Context engineering ensures continuity by remembering user history, goals, and unresolved issues.

Example: A customer support chatbot recalls your earlier complaint about a delayed order, knows the steps already taken, and provides timely updates on the resolution. It can even suggest related solutions based on your previous issues, making each interaction feel connected rather than starting from scratch.

Result: Interactions feel seamless and human-like, reducing frustration and repetition. Users experience coherent, continuous support, which increases trust, satisfaction, and the likelihood of returning to the service.

3. Improves Decision Accuracy

When systems have contextual awareness — like time, location, or preferences — their decisions become far more relevant and accurate.

Example: A navigation AI considers current traffic conditions, weather, road closures, and your usual commuting preferences before suggesting the fastest or safest route. It can even adjust recommendations in real-time if conditions change, ensuring the route is optimal for your specific situation.

Result: Decisions are highly relevant, practical, and reliable. Users benefit from smarter, context-aware guidance that saves time, reduces stress, and improves overall efficiency in real-world situations.

4. Reduces Cognitive Load for Users

Good context engineering means users don’t have to repeat information. AI remembers key facts so the user can focus on their goal, not on re-entering data.

Example: A fitness assistant tracks your dietary restrictions, workout preferences, and past progress. When suggesting meal plans or exercise routines, it automatically filters options and tailors recommendations without asking you to re-enter the same information each time.

Result: Users experience a smoother, more intuitive interaction, reducing mental effort and frustration. This leads to higher satisfaction, efficiency, and trust in the AI system.

5. Makes AI Emotionally Intelligent

With conversational and historical context, AI can better infer tone, mood, and intent, not just process words.

Example: A virtual tutor monitors a learner’s responses, pace, and engagement to detect frustration or confusion. It can adjust its explanations, encouragement, or hints accordingly, creating a supportive learning environment that feels responsive and understanding.

Result: Interactions become more empathetic and human-like. Users feel heard and supported, which enhances engagement, motivation, and trust in the AI system.

6. Strengthens Trust and Transparency

When users see that AI correctly “remembers” and “understands” them, they come to see it as reliable.

On the other hand, forgetting basic preferences damages credibility.

Example: A digital assistant consistently remembers your time zone, language preference, and frequently used apps. Over time, it proactively offers relevant suggestions, reminders, and shortcuts, demonstrating that it truly understands your routines and needs.

Result: Users develop long-term trust and confidence in the system. This reliability encourages continued use, higher engagement, and stronger adoption of AI services.

Types of Context Engineering

Context Engineering can be categorized based on how context is captured, stored, and applied across AI systems. Each type serves a distinct purpose in building intelligent, adaptive behavior.

1. Session-Based Context

Session-based context refers to information that an AI retains only during a single interaction. Once the session ends, all data is discarded. It is useful for short-term tasks where continuity across sessions isn’t required. Chatbots often use this type to remember temporary inputs, like a name or query, during a single conversation. This method is simple to implement, reduces privacy risk, and avoids storing unnecessary long-term data, making it a good fit for customer support or other short, one-off sessions.

Real-world Use Cases / Examples

Chatbots that answer queries without storing personal data.

Quick feedback forms or surveys.

Customer support for one-off questions.

Python Code Example

Input:

```python
# Session-based chatbot: context lives only for the current session
session_context = {}

def session_bot(input_text):
    text = input_text.lower()
    if "name" in text:
        session_context["name"] = input_text.split()[-1]
        return f"Hello, {session_context['name']}!"
    elif "how am i" in text and "name" in session_context:
        return f"{session_context['name']}, you are doing great today!"
    else:
        return "Tell me more!"

# Example session
print(session_bot("My name is Alex"))
print(session_bot("How am I today?"))

# Simulate a new session: the context is reset
session_context.clear()
print(session_bot("How am I today?"))
```

Output:

```text
Hello, Alex!
Alex, you are doing great today!
Tell me more!
```

2. Long-Term Context

Long-term context allows AI to remember information across multiple sessions, providing continuity and personalization. It is essential for virtual assistants, recommendation systems, or any AI that interacts with users repeatedly. Information such as preferences, past queries, or prior actions is stored persistently using databases, files, or memory modules. While enhancing personalization, it requires careful handling of user data to protect privacy.

Real-world Use Cases / Examples

Siri or Alexa remembering your favorite music or daily routines.

E-commerce sites remembering your shopping history.

Health apps tracking long-term fitness goals.

Python Code Example

Input:

```python
import json

CONTEXT_FILE = "long_term_context.json"

def save_context(data):
    # Persist the context to disk so it survives across sessions
    with open(CONTEXT_FILE, "w") as f:
        json.dump(data, f)

def load_context():
    # Return the stored context, or an empty dict if nothing is saved yet
    try:
        with open(CONTEXT_FILE, "r") as f:
            return json.load(f)
    except (FileNotFoundError, json.JSONDecodeError):
        return {}

def long_term_bot(input_text):
    context = load_context()
    text = input_text.lower()
    if "name" in text:
        context["name"] = input_text.split()[-1]
        save_context(context)
        return f"Nice to meet you, {context['name']}!"
    elif "how am i" in text and "name" in context:
        return f"{context['name']}, you are doing well!"
    else:
        return "Tell me more!"

# Example sessions (each call reloads the context from the file)
print(long_term_bot("My name is Alex"))
print(long_term_bot("How am I today?"))
```

Output:

```text
Nice to meet you, Alex!
Alex, you are doing well!
```

3. Domain-Specific Context

Domain-specific context tailors AI responses to a specific subject area or domain, ensuring accurate, relevant, and contextually aware outputs. This type is crucial in specialized fields like healthcare, finance, or legal systems, where general AI might fail. By encoding domain knowledge and rules, AI can provide more accurate recommendations, advice, or analysis and is less likely to misread technical terminology.

Real-world Use Cases / Examples

Healthcare chatbots tracking patient symptoms and suggesting treatments.

Financial AI monitoring stock portfolios and providing recommendations.

Legal assistants analyzing case documents and advising on law application.

Python Code Example

Input:

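```python
# Healthcare chatbot: domain rules map a recorded symptom to a response
health_context = {}

# Toy domain rule table (illustrative only)
SYMPTOM_RULES = {"fever": "Paracetamol"}

def healthcare_bot(input_text):
    text = input_text.lower()
    if "symptom" in text:
        # Store the last word as the symptom, e.g. "Symptom fever" -> "fever"
        health_context["symptom"] = text.split()[-1]
        return f"Recorded symptom: {health_context['symptom']}"
    elif "medicine" in text:
        symptom = health_context.get("symptom")
        if symptom in SYMPTOM_RULES:
            return f"For {symptom}, recommended medicine is {SYMPTOM_RULES[symptom]}."
        return "No rule found for that symptom."
    else:
        return "Please tell your symptom."

print(healthcare_bot("Symptom fever"))
print(healthcare_bot("What medicine?"))
```

Output:

```text
Recorded symptom: fever
For fever, recommended medicine is Paracetamol.
```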

4. User-Centric Context

User-centric context focuses on individual user preferences, behavior, and history to personalize AI interactions. This enhances engagement and relevance by considering what the user likes, dislikes, or frequently interacts with. Proper management ensures the AI is responsive to the individual without overwhelming it with unnecessary data.

Real-world Use Cases / Examples

Netflix recommending shows based on watch history.

E-commerce platforms suggesting products based on purchase history.

Personalized news feeds on social media platforms.

Python Code Example

Input:

```python
# User-centric recommendation bot: preferences stored per user ID
user_context = {}

def recommend_bot(input_text, user_id):
    likes = user_context.setdefault(user_id, [])
    text = input_text.lower()
    if "like" in text:
        item = input_text.split()[-1]
        likes.append(item)
        return f"Got it! You like {item}."
    elif "recommend" in text:
        if not likes:
            return "Tell me your preferences first."
        return f"Since you like {', '.join(likes)}, we recommend similar items!"
    else:
        return "Tell me your preferences."

print(recommend_bot("I like Python", "user1"))
print(recommend_bot("I like Data", "user1"))
print(recommend_bot("Recommend something", "user1"))
```

Output:

```text
Got it! You like Python.
Got it! You like Data.
Since you like Python, Data, we recommend similar items!
```
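The dictionary-of-lists bot above keeps every “like” it hears. One way to reflect the point about not overwhelming the system with unnecessary data is to count how often each preference appears and feed only the top few into recommendations. This is a hypothetical sketch using `collections.Counter`; the `MAX_PREFS` cap and the function names are illustrative assumptions.

```python
from collections import Counter, defaultdict
from typing import List

MAX_PREFS = 3  # hypothetical cap on how many preferences drive recommendations

# user_id -> Counter of liked items
profiles = defaultdict(Counter)


def record_like(user_id: str, item: str) -> None:
    # Normalize case so "Python" and "python" count as one preference
    profiles[user_id][item.lower()] += 1


def top_preferences(user_id: str) -> List[str]:
    # Only the most frequent likes are returned; rarer ones are left out
    return [item for item, _ in profiles[user_id].most_common(MAX_PREFS)]


for liked in ["Python", "data", "python", "cricket", "data", "python", "chess"]:
    record_like("user1", liked)

print(top_preferences("user1"))  # ['python', 'data', 'cricket']
```

`most_common` orders items with equal counts by first appearance, which is why “cricket” beats “chess” for the last slot.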

5. External Context

External context integrates information from outside sources or environments to make AI decisions context-aware. It allows AI to adapt to dynamic, real-world conditions like weather, location, or real-time data. By combining internal and external data, AI can provide accurate, timely, and actionable outputs.

Real-world Use Cases / Examples

Weather apps recommending clothing based on forecast.

Navigation apps adjusting routes using real-time traffic data.

Smart homes controlling devices based on external conditions like temperature.

Python Code Example

Input:

```python
# External context: a hard-coded dictionary stands in for a live weather API
def weather_advice(city):
    weather_data = {"Delhi": "rainy", "Mumbai": "sunny"}
    condition = weather_data.get(city, "unknown")
    return f"Weather in {city} is {condition}. Dress accordingly."

print(weather_advice("Delhi"))
print(weather_advice("Mumbai"))
```

Output:

```text
Weather in Delhi is rainy. Dress accordingly.
Weather in Mumbai is sunny. Dress accordingly.
```
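The weather dictionary above stands in for an external service. To illustrate combining internal and external data, here is a hypothetical sketch in which the external lookup is passed in as a function (so a real API client could replace the stub) and merged with a stored user preference. `fake_weather_api`, `user_prefs`, and the preference keys are assumptions for illustration.

```python
from typing import Callable, Dict

# Internal context: stored user preferences (hypothetical profile data)
user_prefs: Dict[str, Dict[str, str]] = {
    "alex": {"city": "Delhi", "commute": "walk"},
}


def fake_weather_api(city: str) -> str:
    # Stub standing in for a real weather service call
    return {"Delhi": "rainy", "Mumbai": "sunny"}.get(city, "unknown")


def commute_advice(user_id: str, get_weather: Callable[[str], str]) -> str:
    prefs = user_prefs.get(user_id, {})
    city = prefs.get("city", "your city")
    condition = get_weather(city)  # external context
    # Combine internal (commute preference) and external (weather) context
    if condition == "rainy" and prefs.get("commute") == "walk":
        return f"It's rainy in {city}. Take an umbrella or consider a cab today."
    return f"Weather in {city} is {condition}. Enjoy your {prefs.get('commute', 'commute')}."


print(commute_advice("alex", fake_weather_api))
```

Passing the lookup as a parameter also makes the external dependency easy to swap out in tests.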

6. Conversational / Dialogue Context

Conversational context allows AI to remember and reference previous user statements in multi-turn dialogues. This ensures coherent conversations, handling pronouns, follow-ups, and clarifications naturally. It is essential for chatbots, virtual assistants, and interactive tutoring systems, where maintaining flow and understanding references improves usability.

Real-world Use Cases / Examples

Travel booking assistants remembering previous destinations.

Chatbots handling multiple questions without making users repeat themselves.

Tutoring systems maintaining conversation history for continuity.

Python Code Example

Input:

```python
# Conversational chatbot: keeps the dialogue history in a list
conversation = []

def dialogue_bot(input_text):
    conversation.append(input_text)
    if "change it" in input_text.lower() and len(conversation) > 1:
        # "it" refers to the previous turn, so look one message back
        last_input = conversation[-2]
        return f"You previously said: '{last_input}'. Updated now."
    else:
        return "Got it!"

print(dialogue_bot("Book a flight to Delhi"))
print(dialogue_bot("Change it to Mumbai"))
```

Output:

```text
Got it!
You previously said: 'Book a flight to Delhi'. Updated now.
```
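The bot above echoes the earlier request but does not actually change the booking. A slightly fuller sketch keeps a small dialogue state (a “destination” slot) so that a follow-up like “Change it to Mumbai” resolves “it” to the current booking and updates it. The regular expressions and the slot name are hypothetical simplifications.

```python
import re

# Dialogue state: slots filled so far in this conversation
state = {}


def dialogue_bot(input_text: str) -> str:
    new_booking = re.search(r"book a flight to (\w+)", input_text, re.IGNORECASE)
    change = re.search(r"change it to (\w+)", input_text, re.IGNORECASE)
    if new_booking:
        state["destination"] = new_booking.group(1)
        return f"Booking a flight to {state['destination']}."
    if change and "destination" in state:
        # Resolve "it" to the booking already held in the dialogue state
        old = state["destination"]
        state["destination"] = change.group(1)
        return f"Changed your flight from {old} to {state['destination']}."
    return "Where would you like to fly?"


print(dialogue_bot("Book a flight to Delhi"))  # Booking a flight to Delhi.
print(dialogue_bot("Change it to Mumbai"))     # Changed your flight from Delhi to Mumbai.
```

Storing structured slots rather than raw messages means the bot does not need to re-parse the whole history on every turn.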

Summary of Context Engineering Types, Use Cases, and Python Features

| Type | Use Case / Example | Python Feature Used |
|---|---|---|
| Session-Based | Chatbot for short queries | Dictionary (session memory) |
| Long-Term | Virtual assistants / recommender systems | File / database |
| Domain-Specific | Healthcare / finance | Domain-specific rules |
| User-Centric | Personalized recommendations | User ID + preferences dictionary |
| External | Weather / traffic / IoT | API / external data dictionary |
| Conversational | Travel / tutoring assistants | List storing conversation history |

Context vs. Prompt Engineering

Context Engineering focuses on designing how AI systems retain and apply information across interactions, enabling continuity and personalization. In contrast, Prompt Engineering is about crafting effective one-time inputs to guide AI responses. While prompt engineering optimizes immediate output, context engineering builds long-term intelligence and memory.

| Feature | Prompt Engineering | Context Engineering |
|---|---|---|
| Focus | Single-turn input optimization | Multi-turn memory and continuity |
| Scope | One-shot or short-term | Long-term, persistent across sessions |
| Goal | Precise output from a single prompt | Intelligent, adaptive behavior over time |
| Tools Used | Prompt templates, formatting techniques | Memory stores, context graphs, retrieval systems |
| Common Use Cases | ChatGPT prompts, image generation | Chatbots, RAG systems, AI tutors |
| Challenges | Ambiguity, prompt length | Relevance filtering, token limits, privacy |

Core Components of Context Engineering

1. Context Hierarchy

Context Hierarchy refers to the structured layering of contextual information that an AI system uses to interpret and respond intelligently. It ensures that the system can distinguish between temporary session data, persistent user preferences, and universal domain knowledge.

Layers of Context

Session-level: Immediate interaction history (e.g., last few messages or actions)

User-level: Long-term preferences, habits, and identity markers

Global-level: Shared knowledge across all users (e.g., grammar rules, algorithm libraries)

Why It Matters

This hierarchy allows AI to prioritize relevant information, personalize responses, and maintain continuity across interactions—essential for adaptive systems like tutors, assistants, and agents.

Python Code Example

Input:

```python
# Context hierarchy: three layers, from most specific to most general
context = {
    "session_level": {"last_question": "Explain Python loops"},
    "user_level": {"preferred_language": "Python"},
    "global_level": {"rules": "Always give short code examples"},
}

print(f"User asked: {context['session_level']['last_question']}")
print(f"Responding in: {context['user_level']['preferred_language']}")
print(f"Follow rule: {context['global_level']['rules']}")
```

Output:

```text
User asked: Explain Python loops
Responding in: Python
Follow rule: Always give short code examples
```

Example

An AI tutor uses session-level context to answer follow-up questions, user-level context to tailor examples in Python, and global-level context to ensure curriculum alignment.

Real-World Use Case

Duolingo uses session context to track your current lesson, user context to remember your fluency level, and global context to apply grammar rules across languages.
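The hierarchy example above prints each layer separately, but it does not show how the layers interact when they disagree. One way to make the hierarchy resolve conflicts is Python's `collections.ChainMap`, which returns a key from the first mapping that contains it. Ordering the maps session, then user, then global means narrower context overrides broader defaults. The keys and values here are hypothetical.

```python
from collections import ChainMap

global_ctx = {"response_style": "short", "language": "English", "code_language": "Python"}
user_ctx = {"code_language": "JavaScript", "level": "beginner"}
session_ctx = {"response_style": "detailed"}  # e.g. the user just asked for more depth

# Lookups check session first, then user, then global
context = ChainMap(session_ctx, user_ctx, global_ctx)

print(context["response_style"])  # detailed   (session overrides global)
print(context["code_language"])   # JavaScript (user overrides global)
print(context["language"])        # English    (falls back to global)

# Ending the session: drop the session layer, keep the rest
context = context.parents
print(context["response_style"])  # short
```

Because `ChainMap` only holds references to the underlying dictionaries, updating a layer (for example, the user's profile) is immediately visible through the combined view.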

2. Memory Design

Memory Design is the architecture that governs how an AI system stores, retrieves, and updates contextual information. It defines what gets remembered, for how long, and under what conditions.

Types of Memory

Short-term (ephemeral): Temporary data used within a session

Long-term (persistent): Stored across sessions for personalization

Selective recall: Filtering memory based on relevance or recency

Why It Matters

Effective memory design enables AI to simulate human-like recall, adapt over time, and build trust through consistent behavior. It’s the backbone of personalization and continuity.

Python Code Example

Input:

```python
# Memory design: short-term (session) vs long-term (persistent) memory
short_term_memory = {}                     # cleared when the session ends
long_term_memory = {"diet": "vegetarian"}  # kept across sessions

# User interacts
short_term_memory["name"] = "Alex"
print(f"Hello {short_term_memory['name']}!")
print(f"Noted your preference: {long_term_memory['diet']}")
```

Output:

```text
Hello Alex!
Noted your preference: vegetarian
```

Example

A chatbot remembers your name during a session (short-term), but stores your dietary preferences for future chats (long-term).

Real-World Use Case

Spotify uses long-term memory to track your listening habits and short-term memory to queue up your recent searches.
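The memory example above covers short-term and long-term storage but not the third type, selective recall. A minimal sketch, assuming each memory carries the turn number when it was stored and that relevance is measured by shared words (a simple stand-in for semantic scoring), ranks memories by relevance plus a recency bonus and returns the top `k`. The `decay` weighting is an arbitrary assumption.

```python
import re
from typing import List, Tuple


def words(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


def recall(memories: List[Tuple[int, str]], query: str, now_turn: int,
           k: int = 2, decay: float = 0.1) -> List[str]:
    """Return the k memories with the best relevance + recency score.

    memories: (turn_number, text) pairs
    decay: hypothetical weight; a larger value makes older memories fade faster
    """
    q = words(query)
    scored = []
    for turn, text in memories:
        relevance = len(q & words(text))                   # shared words with the query
        recency = 1.0 / (1.0 + decay * (now_turn - turn))  # 1.0 for the current turn
        scored.append((relevance + recency, text))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


memories = [
    (1, "User is vegetarian"),
    (3, "User likes spicy food"),
    (8, "User asked about the weather"),
    (9, "User prefers running in the morning"),
]
print(recall(memories, "Suggest spicy vegetarian food", now_turn=10))
# ['User likes spicy food', 'User is vegetarian']
```

Relevance dominates here because each shared word adds a full point while recency adds at most one, so old but relevant facts still win over recent but unrelated ones.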

3. Filtering and Compression

Filtering and Compression are techniques used to manage the volume and relevance of contextual data. Filtering selects the most pertinent pieces of information, while compression reduces their size to fit within model constraints (e.g., token limits).

Techniques

Keyword-based filtering: Retain only context with matching terms

Semantic scoring: Rank context by relevance

Chunking and summarization: Condense long histories into compact forms

Why It Matters

These techniques ensure that only the most useful context is injected into prompts, optimizing performance and reducing noise—especially critical in large-scale or real-time systems.

Python Code Example

Input:

```python
# Filtering & compression example
chat_history = [
    "What is AI?",
    "Explain machine learning.",
    "Tell me about cricket scores.",
    "How does deep learning work?",
]

# Filter: keep only AI-related queries (simple keyword match)
filtered = [msg for msg in chat_history if "learning" in msg or "AI" in msg]

# Compress: merge the kept messages into one line
# (a real system might summarize instead of concatenating)
summary = " | ".join(filtered)

print("Filtered context:", filtered)
print("Compressed summary:", summary)
```

Output:

```text
Filtered context: ['What is AI?', 'Explain machine learning.', 'How does deep learning work?']
Compressed summary: What is AI? | Explain machine learning. | How does deep learning work?
```

Example

Before injecting context into a prompt, the system filters out irrelevant history and compresses the rest to fit within model limits.

Real-World Use Case

Google Assistant filters your calendar and emails to surface only relevant events when you ask, “What’s next today?”
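The filtering example above “compresses” by joining messages, which does not actually reduce their size. A sketch closer to fitting a token limit keeps the most recent messages verbatim within a budget and condenses everything older into a short note. Assumptions: word count is a rough proxy for tokens (a real system would use the model's tokenizer), and the “summary” is just the first few words of each older message rather than a model-generated summary.

```python
from typing import List


def compress_history(history: List[str], budget: int, summary_words: int = 4) -> List[str]:
    """Keep recent messages verbatim within `budget` words; condense the rest.

    Word count is a rough proxy for tokens (assumption), and the condensed
    note itself is not counted against the budget.
    """
    kept: List[str] = []
    used = 0
    # Walk backwards so the most recent messages are kept first
    for i in range(len(history) - 1, -1, -1):
        cost = len(history[i].split())
        if used + cost > budget:
            # Everything from index i back is condensed to its first few words
            older = history[: i + 1]
            gist = "; ".join(" ".join(m.split()[:summary_words]) + "..." for m in older)
            return [f"(earlier: {gist})"] + kept
        kept.insert(0, history[i])
        used += cost
    return kept


history = [
    "Can you explain what machine learning actually means in simple terms?",
    "How is deep learning different from classic machine learning?",
    "Show a short Python example.",
    "Now add comments to it.",
]
for line in compress_history(history, budget=12):
    print(line)
```

Here the two latest messages (10 words) fit the 12-word budget, so they are kept as-is, and the two older questions collapse into a single “(earlier: ...)” line.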

4. Multimodal Integration

Multimodal Integration is the process of combining different types of inputs—text, voice, images, video, and sensor data—into a unified context stream. It allows AI systems to interpret and respond to complex, real-world scenarios.

Modalities

Text: Natural language queries and responses

Voice: Spoken commands and tone analysis

Visuals: Diagrams, screenshots, gestures

Sensor data: Location, movement, environmental cues

Why It Matters

Multimodal integration enables richer understanding and more natural interaction. It’s essential for applications like virtual assistants, autonomous agents, and educational tools that operate across formats.

Python Code Example

Input:

```python
# Multimodal integration: gather inputs from several modalities into one context
input_text = "Explain Newton's law"
input_voice = "user tone: curious"
input_image = "diagram: falling apple"

context = {
    "text": input_text,
    "voice": input_voice,
    "image": input_image,
}

print("Integrated context received:")
for key, value in context.items():
    print(f"- {key}: {value}")
```

Output:

```text
Integrated context received:
- text: Explain Newton's law
- voice: user tone: curious
- image: diagram: falling apple
```

Example

An AI tutor receives a spoken question, a diagram, and a follow-up text—all integrated to generate a coherent response.

Real-World Use Case

Copilot Vision integrates screen content, voice input, and user history to provide contextual help while coding or browsing.
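The multimodal dictionary above holds the inputs side by side. A common next step toward a unified context stream is to normalize every modality into one time-ordered sequence the model can read. This sketch assumes upstream components (for example, speech-to-text and an image captioner) have already turned voice and images into text; the `Event` type and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Event:
    timestamp: float  # seconds since the session started
    modality: str     # "text", "voice", "image", or "sensor"
    content: str      # assumed already converted to text upstream


def to_context_stream(events: List[Event]) -> str:
    # Sort by time so the model sees inputs in the order they happened
    ordered = sorted(events, key=lambda e: e.timestamp)
    return "\n".join(f"[{e.timestamp:.0f}s {e.modality}] {e.content}" for e in ordered)


events = [
    Event(12.0, "text", "Why does it fall straight down?"),
    Event(3.0, "voice", "Explain Newton's law (tone: curious)"),
    Event(5.0, "image", "Diagram of an apple falling from a tree"),
]
print(to_context_stream(events))
```

Tagging each line with its modality lets the model tell, for instance, that “it” in the typed follow-up most likely refers to the apple in the diagram.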

Tools and Technologies for Context Engineering

Platforms and Frameworks

LangChain: Tool and agent orchestration

LlamaIndex: Data ingestion and context-aware queries

Pinecone / Weaviate: Vector search infrastructure

GPT-4 with function (tool) calling: Native tool calling for pulling in context from external systems

Integration Methods

Webhooks & APIs

Tool and plugin interfaces (e.g., OpenAI function calling, Anthropic's Model Context Protocol for Claude)

Retrieval-Augmented Generation (RAG)

Best Practices

Use hybrid retrieval (structured + unstructured)

Checkpoint memory during long interactions (e.g., periodically summarize and persist the conversation state)

Build fallback logic (e.g., a safe default reply when retrieval finds nothing relevant) to improve reliability
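Hybrid retrieval and fallback logic can be sketched together: a structured step filters documents by exact metadata, an unstructured step ranks the remaining text, and a default answer covers the case where nothing matches. Word overlap stands in for vector similarity here; in practice the ranking step would more likely query a vector store such as Pinecone or Weaviate. The documents, `STOPWORDS`, and the fallback message are hypothetical.

```python
import re
from typing import Dict, List

# Hypothetical knowledge base: structured metadata ("product") + unstructured text
DOCS: List[Dict[str, str]] = [
    {"product": "router", "text": "Reset the router by holding the power button for ten seconds."},
    {"product": "router", "text": "Router lights blink orange when the firmware is updating."},
    {"product": "printer", "text": "Reset the printer from the settings menu."},
]
STOPWORDS = {"a", "an", "the", "is", "do", "i", "my", "how", "to", "of", "for"}
FALLBACK = "I couldn't find that in the docs. Let me connect you to a support agent."


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def hybrid_search(query: str, product: str, k: int = 1) -> List[str]:
    # 1) Structured step: exact match on metadata narrows the candidates
    candidates = [d for d in DOCS if d["product"] == product]
    # 2) Unstructured step: rank by word overlap (stand-in for vector similarity)
    q = tokens(query)
    scored = [(len(q & tokens(d["text"])), d["text"]) for d in candidates]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


def answer(query: str, product: str) -> str:
    results = hybrid_search(query, product)
    # Fallback logic: return a safe default instead of an empty or made-up answer
    return results[0] if results else FALLBACK


print(answer("How do I reset my router?", "router"))
print(answer("Where is the warranty card?", "printer"))
```

The structured filter keeps printer instructions out of a router question even though both mention “reset”, which is the main benefit of combining the two retrieval styles.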

Conclusion

As AI evolves from static responders to intelligent collaborators, Context Engineering stands at the heart of this transformation.

It’s not just about making machines smarter — it’s about helping them remember, adapt, and respond in ways that feel human.

From chatbots and learning tutors to autonomous agents and RAG systems, context engineering fuels continuity, personalization, and trust.

By mastering its core pillars — hierarchy, memory design, filtering, and multimodal integration — you’re not just improving models; you’re shaping the future of meaningful human–AI interaction.

At AlmaBetter, we don’t just teach you to build AI — we teach you to build AI that thinks in context.

Our industry-aligned programs in Data Science, AI, and Full Stack Development empower you to create systems that remember, learn, and evolve.

With hands-on projects, expert mentors, and a pay-after-placement model, you gain real-world experience with zero financial risk.

Whether you’re exploring RAG pipelines, memory architectures, or multimodal intelligence, AlmaBetter helps you go beyond prompt engineering — to design AI that truly understands.

Join AlmaBetter today — and start building the next generation of smarter, context-aware AI.

Additional Readings

Knowledge Representation in AI

Natural Language Generation (NLG): A Comprehensive Guide

Types of Agents in AI

What is Generative AI? Exploring the Power and Potential

Best Generative AI Tools and Platforms Worth Using in 2025

Top Generative AI Use Cases and Applications in 2025

What Is Generative AI And How It Is Impacting Businesses?

Generative Adversarial Networks (GANs)