What are some of the applications of NLP?

- Grammar and spell checking: Grammarly, Microsoft Word, Google Docs

- Search engines: DuckDuckGo, Google

- Voice assistants: Alexa, Siri

- News feeds: Facebook, Google News

- Translation systems: Google Translate

Why text preprocessing?

Computers are great at working with structured data such as spreadsheets and database tables, but humans usually communicate in words, not tables, and computers cannot understand raw text directly. NLP bridges this gap with techniques that convert language into numbers or other mathematically interpretable objects, which we can then feed into ML algorithms depending on our requirements.

Machine learning algorithms need data in numeric form, so before encoding text we first have to clean it. This process of preparing (cleaning) text data before encoding is called text preprocessing, and it is the very first step in solving an NLP problem. Libraries such as spaCy and NLTK make preprocessing easier.

Steps involved in preprocessing:

Cleaning

1. Removing URLs

We use Python's built-in re (regular expression) library to remove URLs.
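A minimal sketch of this step, assuming a deliberately simple pattern: anything starting with `http://`, `https://` or `www.` up to the next whitespace counts as a URL. Real-world URLs can be messier, so treat the regex as an illustrative assumption rather than a complete URL matcher.

```python
import re

# Illustrative pattern (an assumption, not an exhaustive URL grammar):
# http:// or https:// or www. followed by any run of non-whitespace characters.
URL_PATTERN = re.compile(r"https?://\S+|www\.\S+")


def remove_urls(text: str) -> str:
    """Replace every URL match with a space, then collapse repeated whitespace."""
    without_urls = URL_PATTERN.sub(" ", text)
    return " ".join(without_urls.split())


print(remove_urls("Read more at https://example.com/post?id=1 or www.example.org today"))
# Read more at or today
```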

2. Removing punctuation and numbers

Punctuation here means the set of symbols in Python's `string.punctuation`: ``!"#$%&'()*+,-./:;<=>?@[\]^_`{|}~``
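One way to do this with only the standard library is `str.translate` with a deletion table built from `string.punctuation` and `string.digits`. Collapsing the leftover whitespace at the end is an extra choice made in this sketch. Note that `string.punctuation` is ASCII-only, so typographic characters such as the curly apostrophe ’ are not removed.

```python
import string

# Translation table that deletes every ASCII punctuation character and every digit.
DELETE_TABLE = str.maketrans("", "", string.punctuation + string.digits)


def remove_punct_and_numbers(text: str) -> str:
    """Delete punctuation and digits, then collapse repeated whitespace."""
    return " ".join(text.translate(DELETE_TABLE).split())


print(remove_punct_and_numbers("Hello, world! It's 2024... #NLP"))
# Hello world Its NLP
```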

3. Converting all text to lowercase

4. Removing stopwords

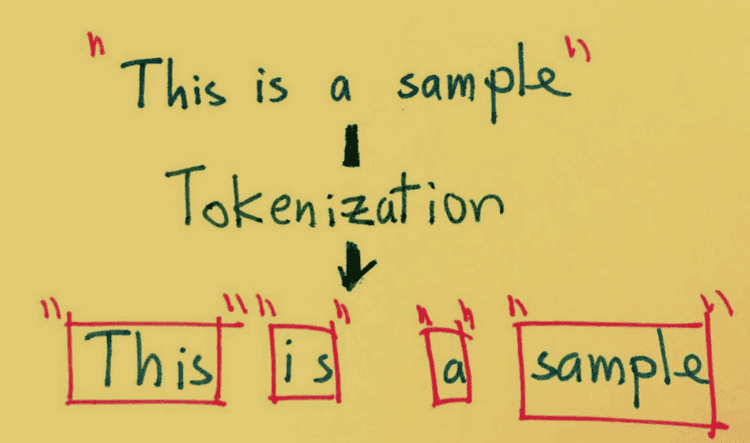
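The stopword step can be sketched without any downloads, assuming a tiny hand-picked stopword set (hypothetical, for illustration only). With NLTK you would instead load its English list via `nltk.corpus.stopwords.words("english")` after running `nltk.download("stopwords")`.

```python
# Hypothetical, hand-picked stopword set; real lists are much longer.
STOPWORDS = {"a", "an", "and", "the", "is", "are", "of", "to", "in", "it", "this"}


def remove_stopwords(text: str) -> str:
    """Drop every whitespace-separated word found in STOPWORDS.

    Assumes the text is already lowercase, because the set is lowercase.
    """
    return " ".join(word for word in text.split() if word not in STOPWORDS)


print(remove_stopwords("this is a simple example of stopword removal"))
# simple example stopword removal
```

Because the comparison is case-sensitive, this is one reason lowercasing comes before stopword removal.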

5. Tokenization

Tokenization is the process of splitting a string into smaller units called tokens. A token can be a word, a punctuation mark, a number, a mathematical symbol, etc.
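A minimal regex-based tokenizer sketch (an assumption for illustration, not how NLTK or spaCy tokenize): each run of word characters becomes one token and every other non-space character becomes a token of its own, so words, numbers, punctuation marks and mathematical symbols all come out separately. Library tokenizers such as NLTK's `word_tokenize` handle contractions and other edge cases more carefully.

```python
import re

# \w+ matches runs of letters, digits and underscores;
# [^\w\s] matches any single character that is neither a word character nor whitespace.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def tokenize(text: str) -> list[str]:
    """Split text into word, number, punctuation and symbol tokens."""
    return TOKEN_PATTERN.findall(text)


print(tokenize("NLP isn't hard: 2 + 2 = 4!"))
# ['NLP', 'isn', "'", 't', 'hard', ':', '2', '+', '2', '=', '4', '!']
```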

6. Stemming and lemmatization

- Stemming algorithms work by cutting off the end or the beginning of a word, using a list of common prefixes and suffixes found in inflected words. This indiscriminate cutting succeeds on some occasions but not always, which is why the approach has limitations: the Porter stemmer, for example, reduces "studies" to "studi", which is not a real word.

- Lemmatization, on the other hand, takes the morphological analysis of words into account. It requires detailed dictionaries that the algorithm can look through to link each form back to its lemma (for example, "studies" → "study"). See the figure for a clearer picture.
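To contrast the two approaches, here is a toy sketch. `toy_stem` is a crude suffix stripper (not the Porter or Snowball algorithm), and `toy_lemmatize` looks words up in a tiny hand-written dictionary (hypothetical; real lemmatizers such as NLTK's `WordNetLemmatizer` rely on a full lexicon and part-of-speech information).

```python
# Toy suffix-stripping stemmer: NOT Porter or Snowball, just rule-based cutting.
# Suffixes are tried longest first.
SUFFIXES = ["ing", "ies", "ed", "es", "s"]


def toy_stem(word: str) -> str:
    for suffix in SUFFIXES:
        # Require at least 3 remaining characters so short words are left intact.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


# Toy lemmatizer: a hypothetical hand-written dictionary from inflected form to lemma.
LEMMA_DICT = {"studies": "study", "studying": "study", "mice": "mouse", "jumped": "jump"}


def toy_lemmatize(word: str) -> str:
    # Unknown words are returned unchanged.
    return LEMMA_DICT.get(word, word)


for w in ["studies", "studying", "mice", "jumped"]:
    print(w, "->", toy_stem(w), "|", toy_lemmatize(w))
# studies -> stud | study
# studying -> study | study
# mice -> mice | mouse
# jumped -> jump | jump
```

The stemmer maps "studies" and "studying" to different stems and cannot handle the irregular plural "mice", while the dictionary lookup links all of them to a proper lemma, but only for words it knows.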

7. Removing short words (length ≤ 2)

Even after all the steps above, some noise can remain in the corpus, so I remove words that are very short.
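A sketch of this filter on an already tokenized list. `min_len` is a parameter introduced here (not from the original) so the cutoff, 3 by default to match the ≤ 2 rule, can be adjusted.

```python
def remove_short_words(tokens: list[str], min_len: int = 3) -> list[str]:
    """Keep tokens with at least `min_len` characters; the default drops length <= 2."""
    return [token for token in tokens if len(token) >= min_len]


print(remove_short_words(["ok", "nlp", "is", "fun", "x", "data"]))
# ['nlp', 'fun', 'data']
```

A fixed length cutoff also drops short but meaningful tokens such as "ai" or "ml", so the threshold is worth checking against your own corpus.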

8. Converting the list back into a string
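The cleaning steps can be chained into a single function. This is a sketch under stated assumptions: a simple URL regex, a tiny hypothetical stopword set, and whitespace splitting as the tokenizer. Stemming and lemmatization are left out, and the text is split into words before stopwords are filtered, because filtering needs individual words. The final `" ".join(...)` is the step that turns the token list back into a string.

```python
import re
import string

# Hypothetical, deliberately tiny stopword set (real lists are much longer).
STOPWORDS = {"a", "an", "and", "the", "is", "are", "of", "to", "in", "it", "this", "for"}
# Illustrative URL pattern: http(s):// or www. up to the next whitespace.
URL_PATTERN = re.compile(r"https?://\S+|www\.\S+")
# Deletes ASCII punctuation and digits in one pass.
DELETE_TABLE = str.maketrans("", "", string.punctuation + string.digits)


def preprocess(text: str) -> str:
    text = URL_PATTERN.sub(" ", text)                   # remove URLs
    text = text.translate(DELETE_TABLE)                 # remove punctuation and numbers
    text = text.lower()                                 # convert to lowercase
    tokens = text.split()                               # tokenize (whitespace split)
    tokens = [t for t in tokens if t not in STOPWORDS]  # remove stopwords
    # Stemming or lemmatization would go here; omitted in this sketch.
    tokens = [t for t in tokens if len(t) > 2]          # drop words with length <= 2
    return " ".join(tokens)                             # convert the list back into a string


print(preprocess("Check https://example.com: NLP is fun, and it's 100% worth it!"))
# check nlp fun its worth
```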

Now we are all set to vectorize our text.

Vectorizing

1. CountVectorizer: converts a collection of text documents into a matrix of token counts, i.e. the number of occurrences of each token in each document. This implementation produces a sparse representation of the counts.

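2. TF-IDF: TfidfTransformer transforms a count matrix into a normalized tf (term-frequency) or tf-idf (term-frequency times inverse document-frequency) representation. The tf-idf of a term t in a document d from a document set is computed as

$$\text{tf-idf}(t, d) = \text{tf}(t, d) \times \text{idf}(t), \qquad \text{idf}(t) = \ln\frac{1 + n}{1 + \text{df}(t)} + 1,$$

where n is the total number of documents and df(t) is the number of documents that contain t. This smoothed idf is scikit-learn's default (`smooth_idf=True`); with `smooth_idf=False` it becomes idf(t) = ln(n / df(t)) + 1. Each resulting row is then normalized, by default to unit Euclidean (L2) length.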
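To make the count matrix concrete, here is a pure-Python sketch of what a count vectorizer computes. It is not scikit-learn's implementation: it uses a plain whitespace split and a dense list of lists instead of a sparse matrix. In scikit-learn itself the equivalent is `CountVectorizer().fit_transform(docs)`, with the column names available from `get_feature_names_out()`; its default tokenizer also lowercases and ignores single-character tokens.

```python
from collections import Counter


def count_vectorize(docs: list[str]) -> tuple[list[str], list[list[int]]]:
    """Build a document-term count matrix.

    Vocabulary is sorted alphabetically; rows are documents, columns are terms.
    Tokens come from a plain whitespace split (an assumption of this sketch).
    """
    tokenized = [doc.split() for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    index = {term: i for i, term in enumerate(vocab)}
    matrix = []
    for toks in tokenized:
        row = [0] * len(vocab)
        for term, count in Counter(toks).items():
            row[index[term]] = count
        matrix.append(row)
    return vocab, matrix


vocab, counts = count_vectorize(["nlp is fun", "nlp nlp rocks"])
print(vocab)   # ['fun', 'is', 'nlp', 'rocks']
print(counts)  # [[1, 1, 1, 0], [0, 0, 2, 1]]
```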

Note:

CountVectorizer only counts how many times each word appears in a document, which biases the representation toward the most frequent words. Rare words that could have helped us process our data more effectively end up being ignored.

To overcome this, we use TfidfVectorizer, which is equivalent to a CountVectorizer followed by a TfidfTransformer.

TfidfVectorizer takes into account how a word is spread across the whole document collection: it weights each word count by the word's inverse document frequency, so words that appear in many documents are penalized, while words that appear in only a few documents keep a higher weight.
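The weighting can be sketched in pure Python. This follows the smoothed formula tf-idf(t, d) = tf(t, d) × idf(t) with idf(t) = ln((1 + n) / (1 + df(t))) + 1, followed by L2 normalization of each row, which are the documented defaults of scikit-learn's TfidfTransformer; it is an illustration, not scikit-learn's code. The input is the count matrix for the two toy documents "nlp is fun" and "nlp nlp rocks" with vocabulary `['fun', 'is', 'nlp', 'rocks']`.

```python
import math


def tfidf(counts: list[list[int]]) -> list[list[float]]:
    """Turn a document-term count matrix into L2-normalized tf-idf rows.

    Uses the smoothed idf: idf(t) = ln((1 + n) / (1 + df(t))) + 1.
    """
    n_docs = len(counts)
    n_terms = len(counts[0]) if counts else 0
    # df(t): number of documents in which term t appears at least once
    df = [sum(1 for row in counts if row[j] > 0) for j in range(n_terms)]
    idf = [math.log((1 + n_docs) / (1 + df[j])) + 1 for j in range(n_terms)]
    result = []
    for row in counts:
        weighted = [tf * w for tf, w in zip(row, idf)]
        norm = math.sqrt(sum(v * v for v in weighted))
        # Scale each row to unit Euclidean length (all-zero rows stay zero).
        result.append([v / norm if norm > 0 else 0.0 for v in weighted])
    return result


counts = [[1, 1, 1, 0], [0, 0, 2, 1]]
for row in tfidf(counts):
    print([round(v, 3) for v in row])
# [0.632, 0.632, 0.449, 0.0]
# [0.0, 0.0, 0.818, 0.575]
```

Because "nlp" occurs in both documents, its idf is ln(3/3) + 1 = 1, lower than the ln(3/2) + 1 ≈ 1.405 of terms that appear in only one document. In the first row it therefore gets a smaller weight (0.449) than "fun" and "is" (0.632), even though all three occur once.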

That’s all, folks! Have a nice day.