The Limitations of ANNs

Last Updated: 2nd February, 2026

Artificial Neural Networks (ANNs), meaning here the standard fully connected kind, are powerful tools for learning patterns in numerical and tabular data, but they are not designed to handle images effectively. The core reason lies in how ANNs process inputs. An image has spatial structure: neighboring pixels together form edges, shapes, textures, and meaningful visual patterns. A typical ANN, however, has no built-in notion of this spatial relationship.

To feed an image into an ANN, we first flatten it into a long one-dimensional vector. For example, a 28 × 28 grayscale image becomes 784 individual numbers. The ANN then treats each of these 784 pixels as an independent input, with no knowledge of which pixels were neighbors. This breaks the natural structure of the image. Two pixels that sit one above the other in the original picture, forming part of a curve or an edge, end up 28 positions apart in the list, and nothing in the network records that they were ever adjacent.
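The effect of flattening on neighboring pixels can be checked with a few lines of plain Python. This is a minimal sketch that assumes row-major flattening (the usual convention); the helper `flat_index` and the toy image are hypothetical names introduced only for illustration.

```python
# Row-major flattening of a 28 x 28 image into a 784-element vector.
HEIGHT, WIDTH = 28, 28


def flat_index(row, col, width=WIDTH):
    """Position of pixel (row, col) after row-major flattening (assumed convention)."""
    return row * width + col


# A toy "image" whose pixel value is simply its own (row, col) coordinate.
image = [[(r, c) for c in range(WIDTH)] for r in range(HEIGHT)]

# Flatten: concatenate the rows one after another.
flat = [pixel for row in image for pixel in row]

vector_length = len(flat)

# Horizontal neighbors stay next to each other in the vector...
horizontal_gap = flat_index(10, 11) - flat_index(10, 10)

# ...but vertical neighbors end up a full row apart.
vertical_gap = flat_index(11, 10) - flat_index(10, 10)

print(vector_length, horizontal_gap, vertical_gap)
```

Even where two neighbors do stay adjacent in the vector, a fully connected layer gives every input its own independent weight, so the ordering itself carries no meaning for the network.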

This flattening leads to several problems:

- Too many parameters:

A fully connected ANN layer connecting 784 inputs to even 128 neurons requires more than 100,000 parameters. For larger images, this number explodes into millions, making training slow and expensive.

- High risk of overfitting:

Because the model has so many parameters, it can memorize the training data instead of learning general patterns.

- Loss of spatial meaning:

The network has no built-in way to represent visual concepts like edges, corners, or textures, because the spatial arrangement of the pixels is discarded at the input.

This is why standard ANNs struggle with visual tasks such as classification, object detection, and recognition.
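The parameter count quoted above can be reproduced with simple arithmetic. The sketch below assumes one weight per input-neuron pair plus one bias per neuron; `dense_params` is a hypothetical helper, and the 224 × 224 RGB image is an illustrative size chosen here, not one taken from the text.

```python
def dense_params(n_inputs, n_neurons):
    """Weights plus one bias per neuron in a fully connected layer."""
    return n_inputs * n_neurons + n_neurons


# The 28 x 28 grayscale example: 784 inputs feeding 128 neurons.
small = dense_params(28 * 28, 128)

# Illustrative larger input (assumed size): a 224 x 224 RGB image, same 128 neurons.
large = dense_params(224 * 224 * 3, 128)

print(f"28x28 grayscale -> {small:,} parameters")
print(f"224x224 RGB     -> {large:,} parameters")
```

The first layer alone grows in direct proportion to the number of pixels, which is why the count reaches the millions for realistic image sizes.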

Here Comes the Magic of CNNs:

When your phone unlocks using Face ID or when Google Photos automatically groups pictures of the same person, the model behind the scenes is typically built on a Convolutional Neural Network (CNN).

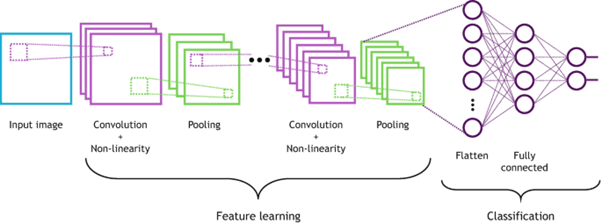

CNNs were created specifically to overcome the limitations of ANNs with visual data. Instead of flattening images, CNNs analyze them in small patches using convolution operations, allowing the network to learn spatial hierarchies:

- Early layers learn edges and simple shapes

- Middle layers learn patterns and textures

- Deeper layers understand objects and complex structures

Because CNNs preserve spatial relationships, they have become the backbone of modern computer vision — powering applications from medical imaging to autonomous vehicles.
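To make the idea of analyzing an image in small patches concrete, here is a minimal sketch of a single convolution pass in plain Python. It assumes no padding and a stride of 1, and it uses a hand-picked vertical-edge kernel; in a real CNN the kernel weights are learned during training. The function `conv2d_valid` is a hypothetical helper, not a library API, and like most deep learning frameworks it slides the kernel without flipping it (strictly speaking, a cross-correlation).

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over every patch of the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of one kh x kw patch; the same weights are reused everywhere.
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Toy 5 x 6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d_valid(image, kernel)
for row in feature_map:
    print(row)
```

The feature map is non-zero only where a patch straddles the boundary between the dark and bright halves, so the location of the edge is preserved. The kernel has just 9 weights no matter how large the image is, in contrast to the fully connected layer described earlier.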

